Background

Docker-in-Docker requires privileged mode to function, which is a significant security concern. Docker daemons have root privileges, which makes them a preferred target for attackers. In its earliest releases, Kubernetes offered compatibility with one container runtime: Docker.

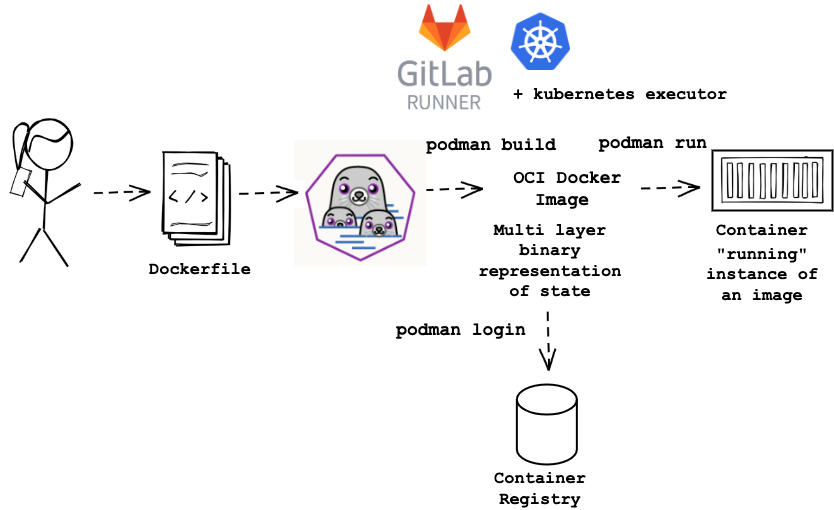

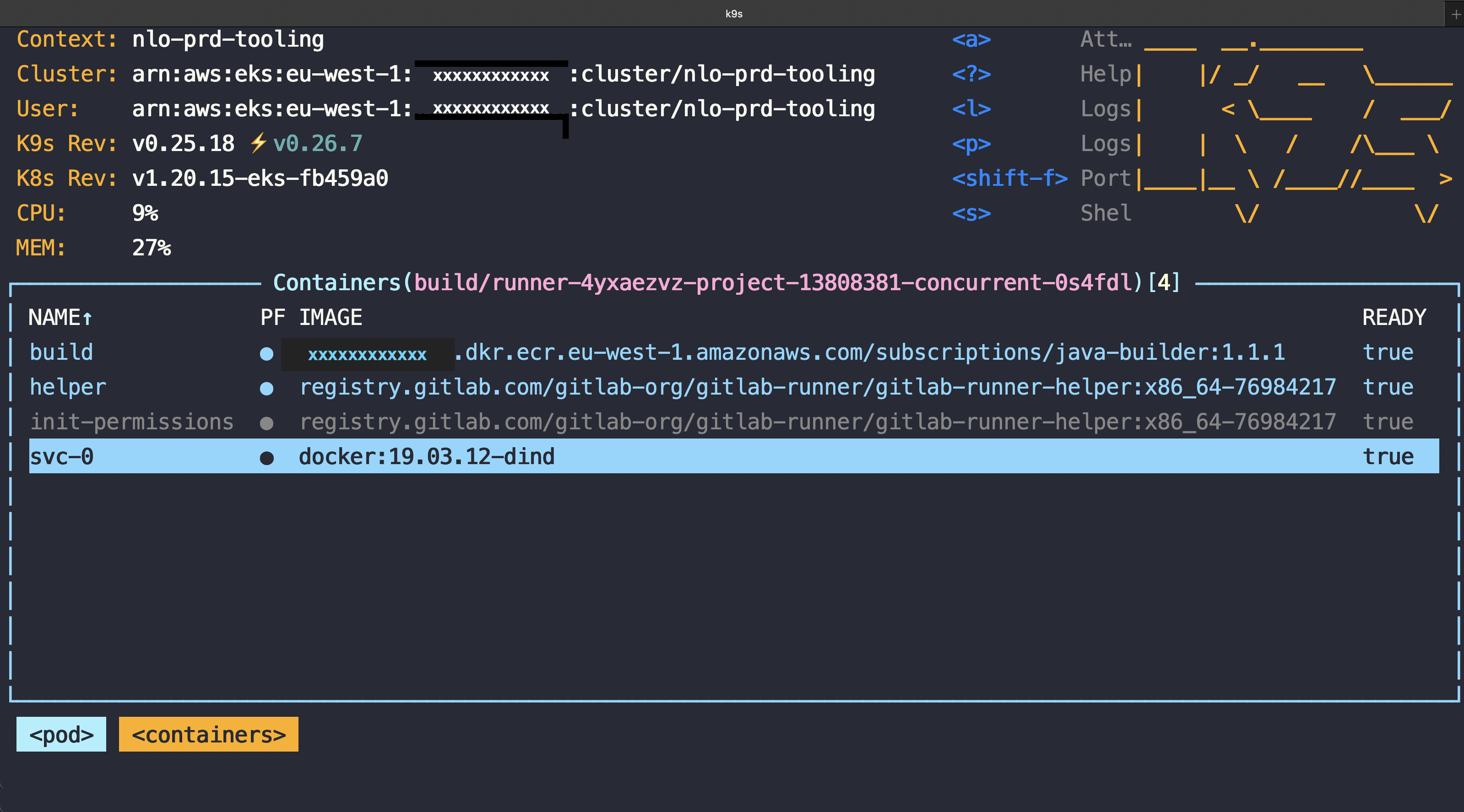

In GitLab CI/CD jobs that build and publish Docker images to a container registry, docker commands in the scripts (build.sh, publish.sh, promote.sh, hotfix.sh) referenced from your pipeline definition (the .gitlab-ci.yml file) might seem like an obvious choice. At least that is what I encountered on a recent assignment, doing CI/CD with GitLab and deploying services to Kubernetes (AWS EKS). One way to enable docker commands in your CI/CD jobs is Docker-in-Docker. Note the docker:19.03.12-dind service in the .gitlab-ci.yml file:

build:

  stage: build

  image: xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/java-builder:1.1.1

  services:

    - docker:19.03.12-dind

  script:

    - deployment/build.sh

[Listing 1 - Snippet from subscriptions/.gitlab-ci.yml file: build step with docker:dind service]

FROM ibm-semeru-runtimes:open-17.0.5_8-jdk-jammy

# Install docker

RUN curl -fsSL get.docker.com -o get-docker.sh && sh get-docker.sh

[Listing 2 - Snippet from subscriptions/deployment/docker/java-builder/Dockerfile used to build Docker image having tag 1.1.1 that has docker tool installed. Job script will be run in the context of this image, see also build container image in screenshot below]

When you have an existing Kubernetes cluster for tooling and need to scale your build infrastructure, the GitLab Runner will run the jobs on the tooling Kubernetes cluster. In this way, each job will have its own pod. This Pod is made up of, at the very least, a build container, a helper container, and an additional container for each service defined in the .gitlab-ci.yml or config.toml files.

Definition from GitLab: “Docker-in-Docker” (dind) means:

- Your registered GitLab runner uses the Docker executor or the Kubernetes executor.

- The executor uses a container image of Docker, provided by Docker, to run your CI/CD jobs.

config.template.toml: |

    [[runners]]

      [runners.kubernetes]

        image = "docker:git"

        privileged = true

[Listing 3 - Snippet from initial build/subscriptions-gitlab-runner Kubernetes ConfigMap]

The --privileged flag gives all capabilities to the container, and it also lifts all the limitations enforced by the device cgroup controller. In other words, the container can then do almost everything that the host, here the Kubernetes worker node, can do. This flag exists to allow special use cases, like running Docker-in-Docker.

When I edited the ConfigMap above to set privileged = false and then restarted the subscriptions-gitlab-runner deployment, the pipeline jobs started failing with a message that a connection to the Docker daemon could not be established.

The Kubernetes project also deprecated Docker as a container runtime after v1.20, and containerd has been the default container runtime since v1.22.

The dockershim component of Kubernetes, which allows Docker to be used as a container runtime for Kubernetes, was removed in release v1.24.

The privileged mode required for running docker:dind, as well as complying with Kubernetes cluster security guidelines on keeping components up-to-date, are pushing towards finding alternatives for Docker commands in the context of GitLab CI/CD jobs.

Kubernetes and Docker Don’t Panic: Container Build Tool Options Galore

Reading the Kubernetes documentation, it quickly became obvious that I could keep using Dockerfiles, the textual definition of how to build container images. I just needed to swap the build tool for one that is rootless by design: “The image that Docker produces isn’t really a Docker-specific image—it’s an OCI (Open Container Initiative) image. Any OCI-compliant image, regardless of the tool you use to build it, will look the same to Kubernetes. Both containerd and CRI-O know how to pull those images and run them.”

My process of choosing a rootless-by-design container build tool started with an inventory of the available open source options:

- Google’s kaniko and jib

- Red Hat’s podman and buildah

- Cloud Native Buildpacks and Paketo from the CNCF projects landscape

I gave up on kaniko after I stumbled on this performance issue report, and after a colleague from Operations asked me whether I had run into this error, which was fixed by removing the mail symbolic loop. One of the pipelines I needed to refactor was already pretty slow, so I was looking for an option that was at least as fast as dind.

Next I dived into jib, which takes a Java-developer-friendly approach: there is no Dockerfile to maintain, and it provides plugins that integrate with Maven or Gradle. I started a proof of concept (POC) on the simpler of the pipelines I needed to refactor, a Java Spring Boot application for AWS Simple Queue Service (SQS) dead-letter-queue management that is built with Gradle. In the POC, the first step was to build the container image with the jib Gradle plugin and then publish it to Amazon Elastic Container Registry (ECR), using the easiest, most straightforward authentication method:

...

plugins {

id 'java'

id 'org.springframework.boot' version '2.7.5'

...

id "com.google.cloud.tools.jib" version "3.3.1"

}

...

apply plugin: "com.google.cloud.tools.jib"

springBoot {

bootJar {

layered {

// application follows Boot's defaults

application {

intoLayer("spring-boot-loader") {

include "org/springframework/boot/loader/**"

}

intoLayer("application")

}

// for dependencies we also follow default

dependencies {

intoLayer("dependencies")

}

layerOrder = ["dependencies", "spring-boot-loader", "application"]

}

buildInfo {

properties {

// ensure builds result in the same artifact when nothing's changed:

time = null

}

}

}

}

jib.from.image='xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/java-service:2.3.0'

jib.to.image='xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/subscriptions-sqs-dlq-mgmt'

jib.from.auth.username='AWS'

jib.to.auth.username='AWS'

jib.to.auth.password='***************************'

jib.from.auth.password='***************************'

jib.to.tags = ['build-jib-latest']

jib.container.ports=['8080']

jib.container.user='1000'

[Listing 4 - build.gradle snippet exemplifying jib integration with obfuscated AWS account in ECR repositoryUri, obfuscated ECR password]

➜ subscriptions-sqs-dlq-mgmt (SUBS-3835-JIB) ✗ ./gradlew jib

> Task :jib

Containerizing application to xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/subscriptions-sqs-dlq-mgmt, xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/subscriptions-sqs-dlq-mgmt:build-jib-latest...

Base image 'xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/java-service:2.3.0' does not use a specific image digest - build may not be reproducible

Using credentials from to.auth for xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/subscriptions-sqs-dlq-mgmt

Executing tasks:

Executing tasks:

Executing tasks:

[== ] 6.3% complete

The base image requires auth. Trying again for xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/java-service:2.3.0...

Using credentials from from.auth for xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/java-service:2.3.0

Executing tasks:

Using base image with digest: sha256:943e7cfcf79149a3e549f5a36550c37d318aaf7d84bb6cfbdcaf8672a0ebee98

Executing tasks:

Executing tasks:

[========== ] 33.3% complete

Executing tasks:

[========== ] 33.3% complete

> checking base image layer sha256:223828f10a72...

Executing tasks:

[========== ] 33.3% complete

> checking base image layer sha256:223828f10a72...

> checking base image layer sha256:1b4b24710ad0...

Executing tasks:

[========== ] 33.3% complete

> checking base image layer sha256:223828f10a72...

> checking base image layer sha256:1b4b24710ad0...

Executing tasks:

[========== ] 33.3% complete

> checking base image layer sha256:223828f10a72...

> checking base image layer sha256:1b4b24710ad0...

> checking base image layer sha256:cf92e523b49e...

> checking base image layer sha256:0b7a45bc802a...

Executing tasks:

[========== ] 33.3% complete

> checking base image layer sha256:223828f10a72...

> checking base image layer sha256:1b4b24710ad0...

> checking base image layer sha256:cf92e523b49e...

> checking base image layer sha256:0b7a45bc802a...

Executing tasks:

[========== ] 34.7% complete

> checking base image layer sha256:223828f10a72...

> checking base image layer sha256:cf92e523b49e...

> checking base image layer sha256:0b7a45bc802a...

Executing tasks:

[=========== ] 36.1% complete

> checking base image layer sha256:223828f10a72...

> checking base image layer sha256:0b7a45bc802a...

Executing tasks:

[=========== ] 37.5% complete

> checking base image layer sha256:223828f10a72...

Executing tasks:

[============ ] 38.9% complete

Executing tasks:

Container entrypoint set to [java, -cp, @/app/jib-classpath-file, nl.nederlandseloterij.subway.sqsdlqmgmt.SqsDlqMgmtApplication]

Executing tasks:

Executing tasks:

Executing tasks:

Built and pushed image as xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/subscriptions-sqs-dlq-mgmt, xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/subscriptions-sqs-dlq-mgmt:build-jib-latest

Executing tasks:

[============================ ] 91.7% complete

> launching layer pushers

BUILD SUCCESSFUL in 14s

4 actionable tasks: 3 executed, 1 up-to-date

➜ subscriptions-sqs-dlq-mgmt (SUBS-3835-JIB) ✗

[Listing 5 - ./gradlew jib output with obfuscated AWS account in ECR repositoryUri’s]

I went through documentation to get more advanced and secure ways of authenticating to Elastic Container Registry (ECR) with jib, and found alternatives:

- Credential helper docker-credential-ecr-login. This would require the Docker daemon, so it would not get rid of privileged mode.

- Do a docker login or podman login in a pipeline job script, so that a credential file is written to one of the default locations that jib searches.

The docker login option was a no-brainer, since that was what the pipeline job script was already using:

aws ecr get-login-password --region eu-west-1 | docker login --username AWS --password-stdin xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions

or, on a local machine using aws-vault:

aws-vault exec ecr -- aws ecr get-login-password --region eu-west-1 | docker login --username AWS --password-stdin xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions

[Listing 6 - ECR authentication in pipeline script and local machine using docker login with obfuscated AWS account in ECR repositoryUri]

docker login creates or updates the $HOME/.docker/config.json file, which contains in “auths” a list of authenticated registries and the credentials store, like “osxkeychain” on macOS.

The podman login option proved to be exactly what I was looking for. Podman is an open source Red Hat product designed to build, manage and run containers with a Kubernetes-like approach.

- Daemon-less: Docker uses a daemon, a program that runs continuously in the background, to create images and run containers. Podman has a daemon-less architecture, which means it can run containers under the user starting the container.

- Rootless: since Podman has no daemon to manage its activity, it also dispenses with root privileges for its containers.

- Drop-in replacement for docker commands: Podman provides a Docker-compatible command line front end, so docker can simply be aliased to podman. One catch is that for the build command Podman calls buildah bud. Buildah provides the build-using-dockerfile (bud) command, which emulates Docker’s build command.

- Both Podman and Buildah are actively maintained after being open sourced by Red Hat, and there is a community and plenty of documentation around them. Red Hat also actively uses Podman in Red Hat Enterprise Linux and CentOS versions 8 and higher.
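Because the two command line tools are largely interchangeable, a script can pick whichever one is installed instead of hard-coding the name. The following is only a minimal sketch: pick_cli is a hypothetical helper name, not something from the pipeline described here, and it only checks that a binary is on the PATH.

```shell
#!/bin/sh
# Hypothetical helper (not part of the original pipeline scripts):
# print the first CLI from the argument list that is installed.
pick_cli() {
  for candidate in "$@"; do
    if command -v "$candidate" >/dev/null 2>&1; then
      printf '%s\n' "$candidate"
      return 0
    fi
  done
  echo "none of the requested CLIs is installed: $*" >&2
  return 1
}

# Example usage: prefer podman, fall back to docker.
# CONTAINER_CLI=$(pick_cli podman docker) && "$CONTAINER_CLI" --version
```

Passing the candidates as arguments keeps the preference order visible at the call site.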

On local machines where aws-vault is installed and configured, the podman login command is:

aws-vault exec ecr -- aws ecr get-login-password --region eu-west-1 | podman login --username AWS --password-stdin xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions

podman login will then create or update the file $HOME/.config/containers/auth.json, and store the username and password from STDIN as a base64 encoded string in “auth” for each authenticated registry listed in “auths”. See the podman-login documentation for full details.
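To make the “auth” entry less mysterious, here is a small sketch of how a username and password pair maps to such a base64 string and back. The function names encode_auth and decode_auth are made up for this illustration, and the password is a dummy value.

```shell
#!/bin/sh
# Hypothetical helpers for illustration only.

# Encode "username:password" the way an "auth" entry stores it.
encode_auth() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Decode an "auth" entry back to "username:password".
decode_auth() {
  printf '%s' "$1" | base64 --decode
}

# Example usage with a dummy password:
# decode_auth "$(encode_auth AWS not-a-real-password)"
```

Base64 is an encoding, not encryption, so anyone who can read the file can recover the credentials. Treat the auth file like any other secret.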

Thus Podman is a much better fit than jib for existing pipelines that rely heavily on scripts with Docker commands. I definitely recommend jib when starting a Java project from scratch. I have even seen jib used successfully with skaffold, a command line tool open sourced by Google that facilitates continuous development for container-based and Kubernetes applications.

Unprivileged Docker/OCI Container Image Builds: Implementation using Podman

Update build container base image

FROM ibm-semeru-runtimes:open-17.0.5_8-jdk-jammy

RUN apt-get update && apt-get install -y podman git jq gettext unzip && rm -rf /var/lib/apt/lists/*

ENV LANG en_US.UTF-8

# Install AWS cli v2

RUN curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip" \

&& unzip awscliv2.zip \

&& ./aws/install -b /bin \

&& aws --version

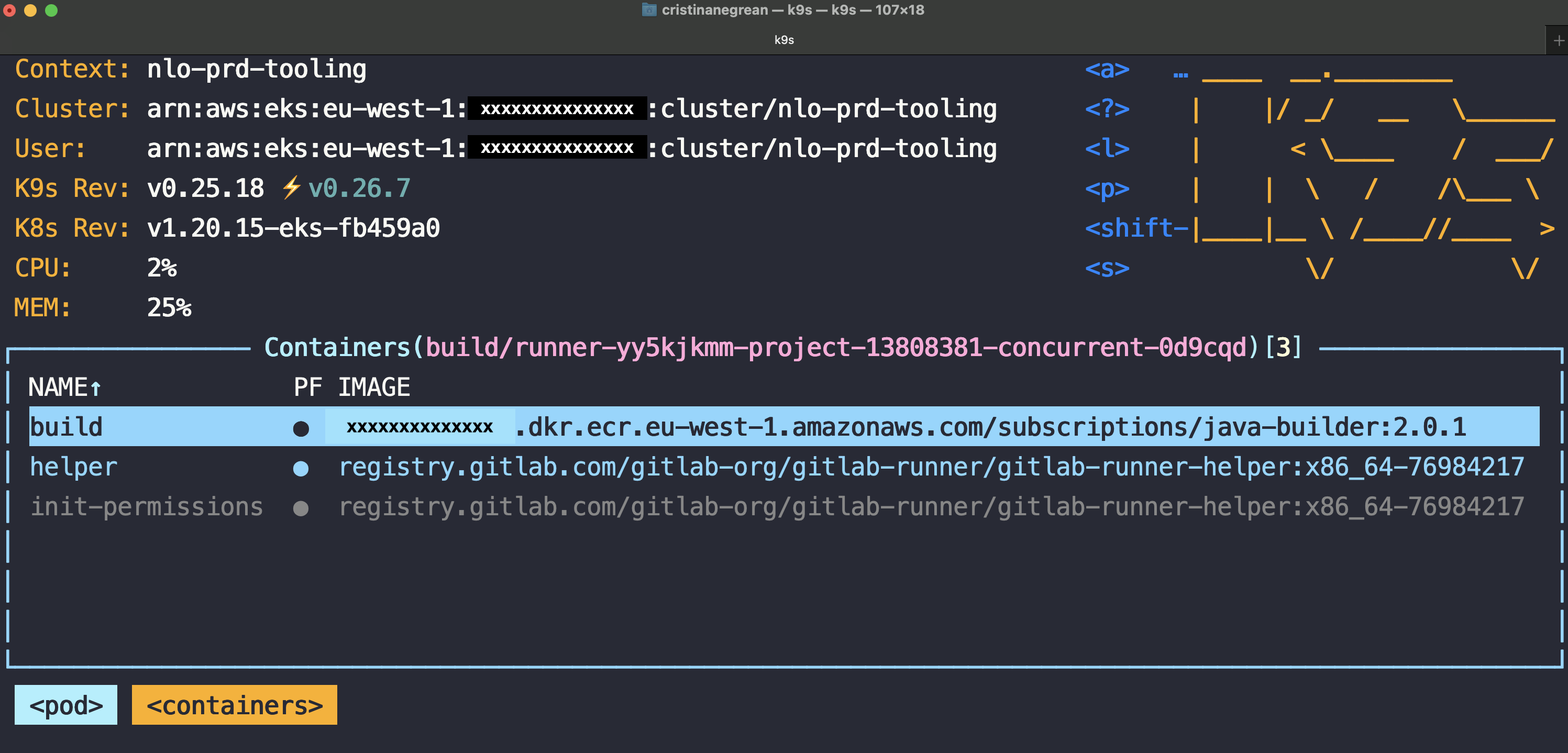

[Listing 7 - Snippet from subscriptions/deployment/docker/java-builder/Dockerfile used to build Docker image having tag 2.0.1 that has podman installed instead of docker]

Refactor docker commands in job scripts to use podman

1) Authenticating to AWS ECR: simply replace docker with podman:

echo "Logging in to ECR $ECR_URL"

aws ecr get-login-password --region eu-west-1 | podman login --username AWS --password-stdin "$ECR_URL"

[Listing 8 - Snippet from subscriptions/deployment/auth/auth-to-tooling-ecr.sh script]
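The login script hard-codes the region. As a sketch of one possible refinement, the region could be derived from the registry URL itself, assuming the URL follows the account.dkr.ecr.region.amazonaws.com pattern used throughout this article. ecr_region_from_url is a hypothetical helper, not part of the original script.

```shell
#!/bin/sh
# Hypothetical helper: extract the AWS region from an ECR registry URL
# of the form <account>.dkr.ecr.<region>.amazonaws.com[/<repository>].
ecr_region_from_url() {
  host="${1%%/*}"            # strip the repository path, if any
  rest="${host#*.dkr.ecr.}"  # drop "<account>.dkr.ecr."
  printf '%s\n' "${rest%%.*}"
}

# Example usage (requires the aws CLI and podman):
# aws ecr get-login-password --region "$(ecr_region_from_url "$ECR_URL")" \
#   | podman login --username AWS --password-stdin "$ECR_URL"
```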

2) Building and publishing Docker container images: simply replace docker with podman:

echo $(podman --version)

EXTRA_GRADLE_OPTS="--no-daemon sonarqube -Dsonar.host.url=${SONAR_URL} -Dsonar.login=${SONAR_LOGIN_TOKEN}"

PUBLISH_FUNCTION="publish_service_ecr"

function publish_service_ecr {

DOCKER_IMAGE_BUILD_PATH=$1;

DOCKER_IMAGE_NAME=$2;

echo "Building image $DOCKER_IMAGE_NAME at path $DOCKER_IMAGE_BUILD_PATH"

BRANCH_TAG="${DOCKER_IMAGE_NAME}:${CI_COMMIT_REF_SLUG}_${CI_PIPELINE_ID}"

LATEST_TAG="${DOCKER_IMAGE_NAME}:latest_${CI_COMMIT_REF_SLUG}"

APP_VERSION=`cat ../VERSION`

BUILD_TAG="${DOCKER_IMAGE_NAME}:build_${APP_VERSION}_${CI_PIPELINE_ID}"

TAGS="-t ${BRANCH_TAG} -t ${BUILD_TAG} -t ${LATEST_TAG}"

#copy the project root VERSION file to the docker-image for this service

cp ../VERSION ${DOCKER_IMAGE_BUILD_PATH}/build/VERSION

#extract the libs jars

cd ${DOCKER_IMAGE_BUILD_PATH}/build/libs

java -Djarmode=layertools -jar *.jar extract

cd -

podman build \

--build-arg PIPELINE_ID_ARG=${CI_PIPELINE_ID} \

${TAGS} \

-f docker/java-service/Dockerfile-build \

${DOCKER_IMAGE_BUILD_PATH}

podman push "${BRANCH_TAG}"

podman push "${BUILD_TAG}"

podman push "${LATEST_TAG}"

}

#BUILD

../gradlew --build-cache --stacktrace --parallel --max-workers 4 build -p ../ $EXTRA_GRADLE_OPTS

SERVICES=$(cat services | xargs)

#PUBLISH

mkdir -p tmp

for SERVICE in $SERVICES

do

echo "Publishing service: $SERVICE";

#authenticate to ECR

auth/auth-to-tooling-ecr.sh

#call publish_service_ecr function with container image build path and image name input parameters

#redirect stderr and stdout to temporary log file

$PUBLISH_FUNCTION ../subscriptions/$SERVICE/$SERVICE-app xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/$SERVICE-app > tmp/publish_service_$SERVICE.log 2>&1 &

done;

[Listing 9 - Snippet from subscriptions/deployment/build.sh script with obfuscated AWS account in ECR repositoryUri]

where DOCKER_IMAGE_BUILD_PATH is the Gradle module path of each application service, e.g. ./subscriptions/batch/batch-app for the batch (micro)service.

That is because the application service Dockerfile, docker/java-service/Dockerfile-build, references the Gradle module build output, following the Spring Boot jar layering order: dependencies, spring-boot-loader, libs-dependencies and application.

FROM xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/java-service:2.3.1

ARG PIPELINE_ID_ARG=000000

ENV PIPELINE_ID=${PIPELINE_ID_ARG}

COPY build/libs/dependencies/ ./

COPY build/libs/spring-boot-loader/ ./

COPY build/libs/libs-dependencies/ ./

COPY build/libs/application/ ./

COPY build/VERSION ./

[Listing 10 - subscriptions/deployment/docker/java-service/Dockerfile-build file with obfuscated AWS account in ECR repositoryUri. Container image java-service having tag 2.3.1 includes a service user and can run as non root.]
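Listing 9 starts each publish call in the background. The snippet does not show how the job waits for those calls, so the following is only a sketch of one way background jobs can be awaited, so that the CI job fails when any of them fails. run_all_and_wait is a hypothetical helper, and for simplicity it takes command strings rather than the shell function used in build.sh.

```shell
#!/bin/sh
# Hypothetical helper: run each command string in the background,
# then wait for all of them. Returns non-zero if any job failed.
run_all_and_wait() {
  pids=""
  for cmd in "$@"; do
    sh -c "$cmd" &
    pids="$pids $!"          # remember the PID of each background job
  done
  failed=0
  for pid in $pids; do
    wait "$pid" || failed=1  # wait returns the exit status of that job
  done
  return "$failed"
}

# Example usage:
# run_all_and_wait "sleep 1" "echo done" || echo "at least one job failed"
```

Waiting on each PID separately is what makes the individual exit statuses available. A bare wait without arguments would block until all jobs finish but would not report their failures.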

The pipeline also has promote and hotfix flows. For those, the refactor was likewise a drop-in replacement of the docker command with the podman command.

The deploy flows use the Helm template and kubectl apply utilities and did not use Docker commands. As stated earlier, a Docker image isn’t really a Docker-specific image: it’s an OCI (Open Container Initiative) image. Any OCI-compliant image, regardless of the tool you use to build it, will look the same to Kubernetes no matter which container runtime is used: containerd, CRI-O, Docker, etc. All container runtimes know how to pull those (OCI) images, here from AWS ECR, and run them.
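Before or after such a refactor, it can help to take stock of the docker invocations that remain in the job scripts. A minimal sketch, assuming the scripts live under one directory: list_docker_calls is a hypothetical helper and only looks for a handful of common subcommands.

```shell
#!/bin/sh
# Hypothetical helper: list lines under a directory that invoke the
# docker CLI with a common subcommand (file name and line number included).
list_docker_calls() {
  grep -rnE '(^|[^[:alnum:]_./-])docker[[:space:]]+(build|push|pull|login|tag|run)([[:space:]]|$)' "$1"
}

# Example usage:
# list_docker_calls deployment/ || echo "no docker invocations left"
```

grep exits with a non-zero status when nothing matches, so the helper can double as a check in a pipeline step.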

Remove docker:dind service from .gitlab-ci.yml

build:

  stage: build

  image: xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/subscriptions/java-builder:2.0.1

  script:

    - deployment/build.sh

[Listing 11 - Snippet from subscriptions/.gitlab-ci.yml file: build step without docker:dind service]

As you can see in the screenshot below, there is no longer an svc-0 container with a docker:dind image, and the job runs in the context of the build container with the new image tag 2.0.1:

Drop privileged mode in the Kubernetes executor GitLab Runner deployment configuration

config.template.toml: |

    [[runners]]

      [runners.kubernetes]

        image = "ubuntu:22.04"

        privileged = false

[Listing 12 - Snippet from updated build/subscriptions-gitlab-runner Kubernetes ConfigMap with privileged mode turned off]
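To keep privileged mode from creeping back in, a simple guard can scan the runner configuration. This is only a sketch: is_privileged_enabled is a hypothetical helper, and it assumes the setting is written on its own line as in the listing above.

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit status 0) when the given runner
# configuration file contains a line that sets privileged = true.
is_privileged_enabled() {
  grep -Eq '^[[:space:]]*privileged[[:space:]]*=[[:space:]]*true' "$1"
}

# Example usage:
# if is_privileged_enabled config.toml; then
#   echo "privileged mode is still enabled" >&2
#   exit 1
# fi
```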

Conclusion

As a result, getting rid of dind:

- facilitates upgrading the tooling cluster to a Kubernetes version where Docker is not the default runtime, by making sure no privileged pods execute Docker commands.

- improves Kubernetes cluster security, since privileged mode is dropped for containers on the tooling cluster worker nodes.

- facilitates Kubernetes application security when podman run is used, as containers in Podman do not have root access by default.

Reference Shelf

I did not reinvent the wheel; others discovered Podman as a Docker alternative before me: